Q&A: Who Needs to Upgrade to the NVIDIA RTX 6000 Ada Generation GPU?

"Yes, you should upgrade to the NVIDIA RTX 6000, depending on your use case."

Before we can confirm this, we need to address a few important questions:

- Are the workload improvements of the RTX 6000 compelling enough to justify the cost?

- Who would benefit the most from upgrading to the RTX 6000 versus staying with the RTX A6000?

- What are the use cases where the RTX 6000 shines the most?

RAVE’s Product Development team eagerly began testing both the RTX 6000 and RTX A6000 using the compact yet powerful RAVE RenderBEAST™ computer system. RAVE’s flagship computer houses a 13th-generation Intel Core i9-13900K CPU, 64GB of DDR5 memory, and a 1TB high-speed M.2 NVMe drive in a shoebox-sized form factor. “To debut the XR-3, Varjo chose RAVE’s RenderBEAST for the best possible experience,” Varjo shared on Twitter. “An investment in better hardware can make up for a multitude of software sins.” Whether you’re an enthusiast, a pioneering immersive developer, or a power user in data science or geospatial computing, the RenderBEAST powers any workload with ease.

Our testing was designed to push a single-GPU system to its limits using a commercially available, compute-intensive software application.

The goal: measure under stress. How a system responds under stress tells the REAL performance story. We ran the heaviest possible CPU/GPU workload on a standard computer with multiple peripherals and programs running simultaneously to see which card performed better under those conditions. Keep this goal in mind when reviewing our results, because our numbers are lower than those in most other comparison tests. Here are a few things we did to increase the workload:

- We tested on a standardized computer. Experts know the tricks of the trade for overclocking and tuning systems for optimal performance. But since most users do NOT do this, we chose not to optimize our test system for the software. In a real-life scenario, optimizing the system for the software would further increase performance and the resulting frames per second (FPS).

- We tested Microsoft Flight Simulator (MSFS), which is known as a rendering hog because it’s difficult to run without serious GPU and CPU power. MSFS renders photogrammetry data to create its massive flying environment, and it uses as much CPU, GPU, and VRAM as the system has.

- We flew MSFS low to the ground, an important distinction because it dramatically increases the amount of imagery to render, which increases CPU and GPU demand. Many MSFS comparison demos stay high in the sky because there’s less to render at 30,000 feet when the skies are blue. Staying high yields artificially high FPS, while staying low drastically lowers FPS due to the increased rendering load. To validate this theory, we reran the test while keeping the plane high in the sky, and FPS rose to over 90. We purposely flew the plane low to maximize rendering and still achieved an impressive 50 to 60 FPS.

- We tested with Varjo’s XR-3, the most demanding head-mounted display (HMD) on the market. If you haven’t personally tried an XR-3, you are genuinely missing out on an incredible XR experience. The image quality on the XR-3 will spoil you for any other HMD. However, that crystal-clear immersive clarity requires four display ports just for the headset to function: one 1920 x 1920 display plus one 2880 x 2720 display per eye, which together deliver an impressive 70 ppd (pixels per degree). Adding a 4K monitor to display the user experience (common practice in training and simulation scenarios) requires a fifth display. To confirm that the XR-3 required heavy rendering, we ran the same tests using a competitor HMD and, due to the lighter workload, saw an FPS increase similar to the one in our 30,000-foot test. Note that while we gained FPS when switching to a less demanding HMD, the overall quality and experience were significantly less impressive than with the XR-3.
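The workload described above can be roughed out with simple arithmetic. The Python sketch below counts native pixels across all five displays and converts the frame rates we observed (50 to 60 FPS low, over 90 FPS high) into per-frame time budgets. It assumes, as our own simplification, that "4K" means a 3840 x 2160 UHD monitor and that every display is rendered at its native resolution, which real applications may not do. The function and variable names are illustrative only.

```python
# Rough workload arithmetic for the test setup described above.
# Assumptions (ours): "4K" = 3840 x 2160, and each display is rendered at native resolution.

XR3_DISPLAYS_PER_EYE = [(1920, 1920), (2880, 2720)]  # (width, height), per the XR-3 description
MONITOR_4K = (3840, 2160)                            # assumed UHD resolution


def pixel_count(resolution: tuple[int, int]) -> int:
    """Number of pixels in a display of the given (width, height)."""
    width, height = resolution
    return width * height


def frame_time_ms(fps: float) -> float:
    """Time available to render one frame, in milliseconds."""
    if fps <= 0:
        raise ValueError("fps must be positive")
    return 1000.0 / fps


headset_pixels = 2 * sum(pixel_count(r) for r in XR3_DISPLAYS_PER_EYE)  # both eyes
monitor_pixels = pixel_count(MONITOR_4K)
total_pixels = headset_pixels + monitor_pixels

print(f"XR-3, four displays: {headset_pixels:,} pixels")
print(f"4K monitor:          {monitor_pixels:,} pixels")
print(f"All five displays:   {total_pixels:,} pixels")
print(f"Headset vs. one 4K monitor: {headset_pixels / monitor_pixels:.1f}x")

# Frame-time budgets for the frame rates we observed.
observed = [("low altitude, 50 FPS", 50), ("low altitude, 60 FPS", 60), ("high altitude, 90 FPS", 90)]
for label, fps in observed:
    print(f"{label}: {frame_time_ms(fps):.1f} ms per frame")
```

By this count the headset alone accounts for about 23 million pixels per frame, roughly 2.8 times a single 4K monitor, and the drop from over 90 FPS to 50 to 60 FPS means each frame took at least about 5.6 to 8.9 ms longer to produce.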

With our testing parameters in place, we started testing using a mannequin wearing an XR-3, flying low to the ground, with the mannequin head slowly rotating right and left to capture all the beautiful scenery (and ray tracing) below.

The RESULTS and answers to our questions:

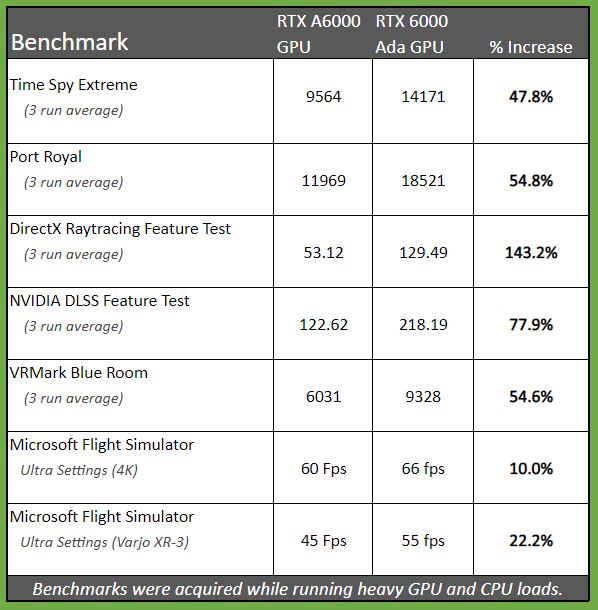

As NVIDIA promised, the results on the RTX 6000 were impressive, with the RTX 6000 strongly outperforming the previous generation RTX A6000 in nearly all areas. The following three benchmarks caught our attention:

- 47.8% increase in the Time Spy Extreme benchmark

- 54.8% increase in the Port Royal benchmark

- 143.2% increase in the DirectX Raytracing feature test
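Each figure above is a percent increase in benchmark score relative to the RTX A6000, where higher scores are better. Writing S for a card's score, the standard conversion between percent increase and speedup ratio is:

$$
\text{increase} = \frac{S_{\text{RTX 6000}} - S_{\text{RTX A6000}}}{S_{\text{RTX A6000}}} \times 100\%
\quad\Longleftrightarrow\quad
\frac{S_{\text{RTX 6000}}}{S_{\text{RTX A6000}}} = 1 + \frac{\text{increase}}{100\%}
$$

So a 47.8% increase means 1.478 times the RTX A6000's score, 54.8% means 1.548 times, and 143.2% means 2.432 times, well over double.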

For anyone unfamiliar with those benchmarks, here’s a quick overview of what’s being tested:

- The Time Spy benchmark is a 3DMark system test that uses a DirectX 12 engine to test multiple parts of a system (CPU and GPU) and assign a weighted overall performance score. Higher scores reflect higher combined system performance. This tool is ideal for testing and comparing GPUs and multi-core processors. (48% increase)

- The Port Royal benchmark specifically measures graphics cards. It combines real-time ray tracing with traditional rendering techniques to see how the GPU will perform; the mix reflects how programs use ray tracing to enhance traditional rendering. (55% increase)

- The DirectX Raytracing feature test measures pure ray-tracing performance by isolating the dedicated ray-tracing hardware in the latest graphics cards. (143% increase)

These gains show that the RTX 6000 is far superior in fields such as gaming, virtual reality, and real-time ray tracing. Simply put, the RTX 6000 is the top performer when every fraction of a second matters. Additional VRED testing of CAD renderings, like the image shown on the right, indicated a more modest 15% improvement in render time and quality. Taken together, these data trends let us start answering our questions.

Q: Are the workload improvements of the NVIDIA RTX 6000 compelling enough to justify the cost?

A: Overall, the RTX 6000 will increase performance for the average user. But is that increase worth paying double the cost? The answer is less clear-cut for average users and should be evaluated case by case to account for custom software requirements, peripherals, and workloads.
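One rough way to frame the cost question is relative performance per dollar: divide the speedup (1 + percent gain / 100) by the relative price. The Python sketch below assumes the RTX 6000 costs exactly twice as much as the RTX A6000 (the "double the cost" above, taken literally) and uses the three benchmark gains we measured. It is a back-of-the-envelope comparison, not a purchasing model, and the function name is hypothetical.

```python
def perf_per_dollar_ratio(percent_gain: float, price_ratio: float) -> float:
    """Relative performance per dollar of the new card vs. the old one.

    A result above 1.0 means the new card delivers more performance per dollar.
    """
    speedup = 1.0 + percent_gain / 100.0
    return speedup / price_ratio


PRICE_RATIO = 2.0  # assumption: RTX 6000 costs exactly 2x the RTX A6000

measured_gains = {
    "Time Spy Extreme": 47.8,
    "Port Royal": 54.8,
    "DirectX Raytracing feature test": 143.2,
}

for test, gain in measured_gains.items():
    ratio = perf_per_dollar_ratio(gain, PRICE_RATIO)
    print(f"{test}: {1 + gain / 100:.3f}x speedup, {ratio:.2f}x performance per dollar")
```

Under that assumption, only the ray-tracing test comes out ahead on performance per dollar (about 1.22x); the Time Spy Extreme and Port Royal gains (about 0.74x and 0.77x) don't offset a doubled price on their own, which is why the answer depends on how ray-tracing-heavy your workload is.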

Specialized users will gain the most from the speed and power of the RTX 6000:

- Video editors can save significant time and increase productivity by taking advantage of single-precision performance to edit and export 8K footage in real time.

- Game developers can create more detailed and immersive worlds with real-time ray tracing and AI-accelerated workflows using the increased RT Core and Tensor Core performance.

- AI and data scientists can train deep learning models faster and tackle more complex workloads with greater efficiency.

Q: Who would benefit the most from upgrading to NVIDIA RTX 6000 versus staying with the NVIDIA RTX A6000?

A: The speed of the RTX 6000 makes the most compelling case in training and simulation scenarios. In a tight labor market with pilot shortages, growing training needs, and an ever-present need for medical professionals, having the best, fastest, and most accurate immersive training provides a strong advantage and a pathway to success that’s faster, cheaper, and safer than months or years of physical testing. As the economy and labor force continue to decline, immersive training may be the path of the future, especially with such realistic rendering. The cost of investing in the high-performing RTX 6000 for realistic immersive training is small compared to the cost of injuries and deaths related to training accidents, poor training, or a lack of training. In many training scenarios, a fraction of a second can literally save lives. The realism produced by the best GPU on the market, paired with the best HMD on the market and powered by the RenderBEAST, provides a clear advantage in this use case.

Q: What are the use cases where the NVIDIA RTX 6000 shines the most?

A: Stay tuned! We believe the true power of the RTX 6000 comes when you harness the synergy of multiple cards. To minimize the cost per user, RAVE has started testing vGPUs and combining multiple RTX 6000 cards in various immersive environments. By consolidating SWaP (size, weight, and power), we aim to discover how to maximize the number of concurrent immersive users on a single system while maintaining a quality training experience. We’re also actively conducting R&D into how this affects real-world terrain generation and photogrammetry use cases. We expect the multi-GPU systems to shine and look forward to sharing the results in upcoming blog posts.

To follow our progress, connect with RAVE on LinkedIn or visit our ‘Contact Us’ webpage to sign up for our quarterly newsletter, or let us know how we can help you achieve your immersive goals.

Ready to upgrade? We’d love to chat. RAVE is a solutions provider that can help you think outside of your tunnel and create an innovative solution that optimizes the NVIDIA RTX 6000 wherever you need it the most. Connect with our experts if you’d like us to test-drive your software, are interested in coming to our office for a demo in our immersive RenderBEAST Zone, or would like to test-drive our RenderBEAST immersive bundle in your own environment. Contact RAVE today!

Headquartered in Metro Detroit, RAVE Computer is a woman-owned small business specializing in computer hardware consulting, integration, and support for a variety of professional and government sectors. RAVE is ISO 9001:2015 certified, ITAR certified, and actively pursuing CMMC 2.0 Level 2 certification. RAVE was voted Manufacturer of the Year by Macomb County in 2022 and won Varjo VAR Reseller of the Year for both 2021 and 2022. RAVE has been in business for 35 years and is proud to be an NVIDIA Elite Visualization Partner.